Discover 7422 Tools

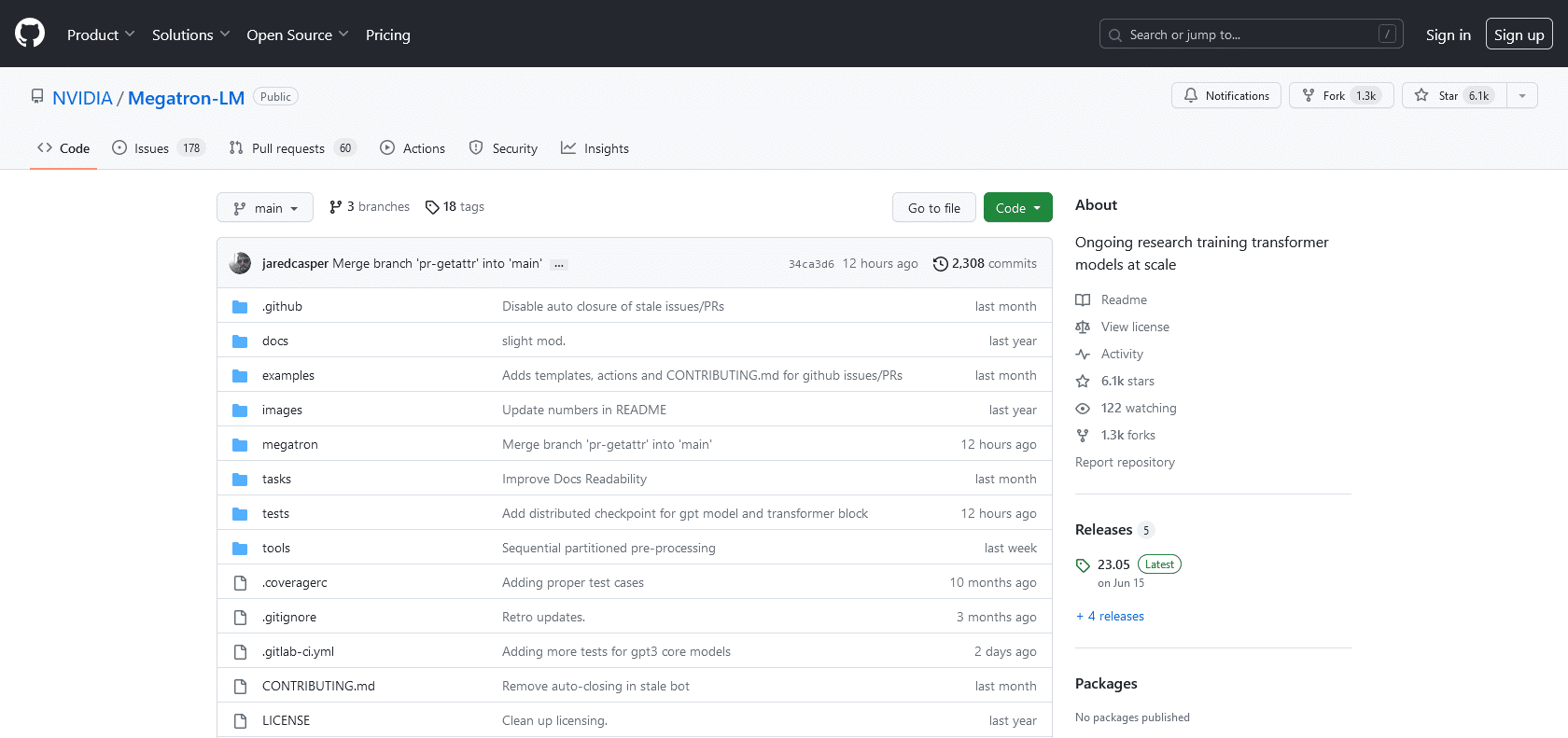

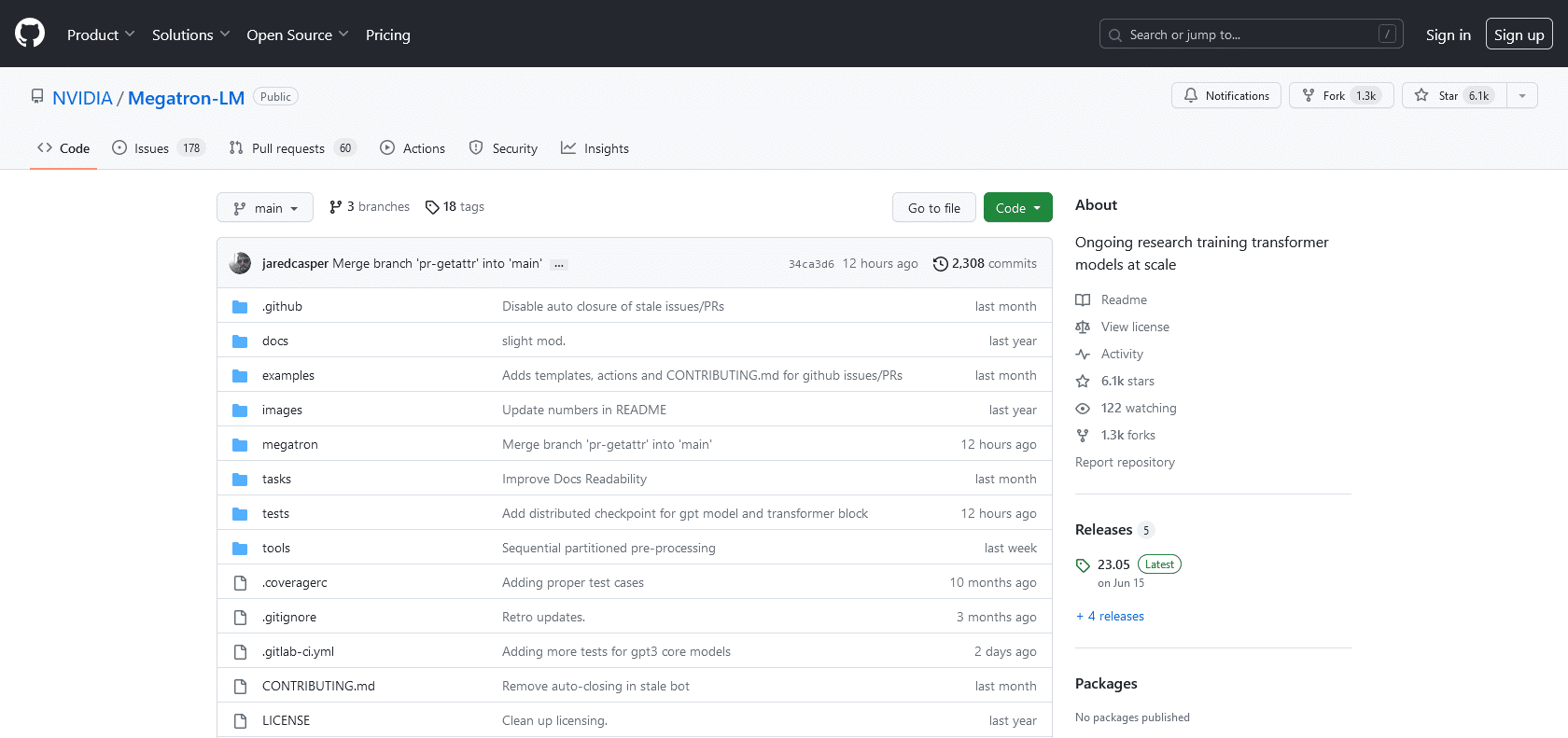

Leverage Megatron-LM: Create, Scale, Optimize

Train large-scale natural language models with Megatron-LM, NVIDIA's PyTorch-based library. The original release scaled to 8.3 billion parameters, and a small set of pre-trained checkpoints is available.

Megatron-LM is an open-source library developed by NVIDIA for training large transformer language models. It is built on PyTorch; it does not provide native TensorFlow or JAX support. The original release trained a GPT-2-style model with 8.3 billion parameters, and later versions scale well beyond that by combining tensor (intra-layer) model parallelism, pipeline parallelism, and distributed data parallelism across many GPUs. Memory-saving techniques such as mixed-precision training and activation recomputation help large models fit on the available hardware. NVIDIA also publishes a small set of pre-trained checkpoints that can serve as a starting point. Megatron-LM targets multi-GPU training workloads, so it best suits teams that have both the hardware and some experience with distributed training.
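To make the idea of distributed data parallelism concrete, here is a minimal pure-Python sketch. It is not Megatron-LM's API: the function names (`mse_grad`, `shard`, `data_parallel_grad`) and the toy 1-D model are assumptions made only for illustration. Each simulated worker computes a gradient on its own slice of the batch, and the results are averaged, which is roughly what an all-reduce does across GPUs.

```python
# Toy illustration of data parallelism. All names are hypothetical and
# nothing here calls Megatron-LM or PyTorch.

def mse_grad(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def shard(items, num_workers):
    """Split a list into num_workers equal contiguous shards.

    Assumes len(items) is divisible by num_workers.
    """
    size = len(items) // num_workers
    return [items[i * size:(i + 1) * size] for i in range(num_workers)]

def data_parallel_grad(w, xs, ys, num_workers):
    """Average the per-worker gradients, as an all-reduce would."""
    x_shards = shard(xs, num_workers)
    y_shards = shard(ys, num_workers)
    local = [mse_grad(w, xs_i, ys_i) for xs_i, ys_i in zip(x_shards, y_shards)]
    return sum(local) / num_workers

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]  # data generated with a true weight of 2.0
w = 0.5

full = mse_grad(w, xs, ys)                                # single-worker gradient
parallel = data_parallel_grad(w, xs, ys, num_workers=4)   # four simulated workers
print(full, parallel)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, so each worker only needs to process its own slice of the data. Real frameworks perform the averaging with collective communication rather than a Python loop.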

Create large-scale natural language models

Scale models past 8 billion parameters

Built on PyTorch

Pre-trained models and optimization techniques available

Megatron-LM


Copyright © 2025 AI-ARCHIVE
